CV Chat - AWS Bedrock integration

February 25, 2025

Introduction

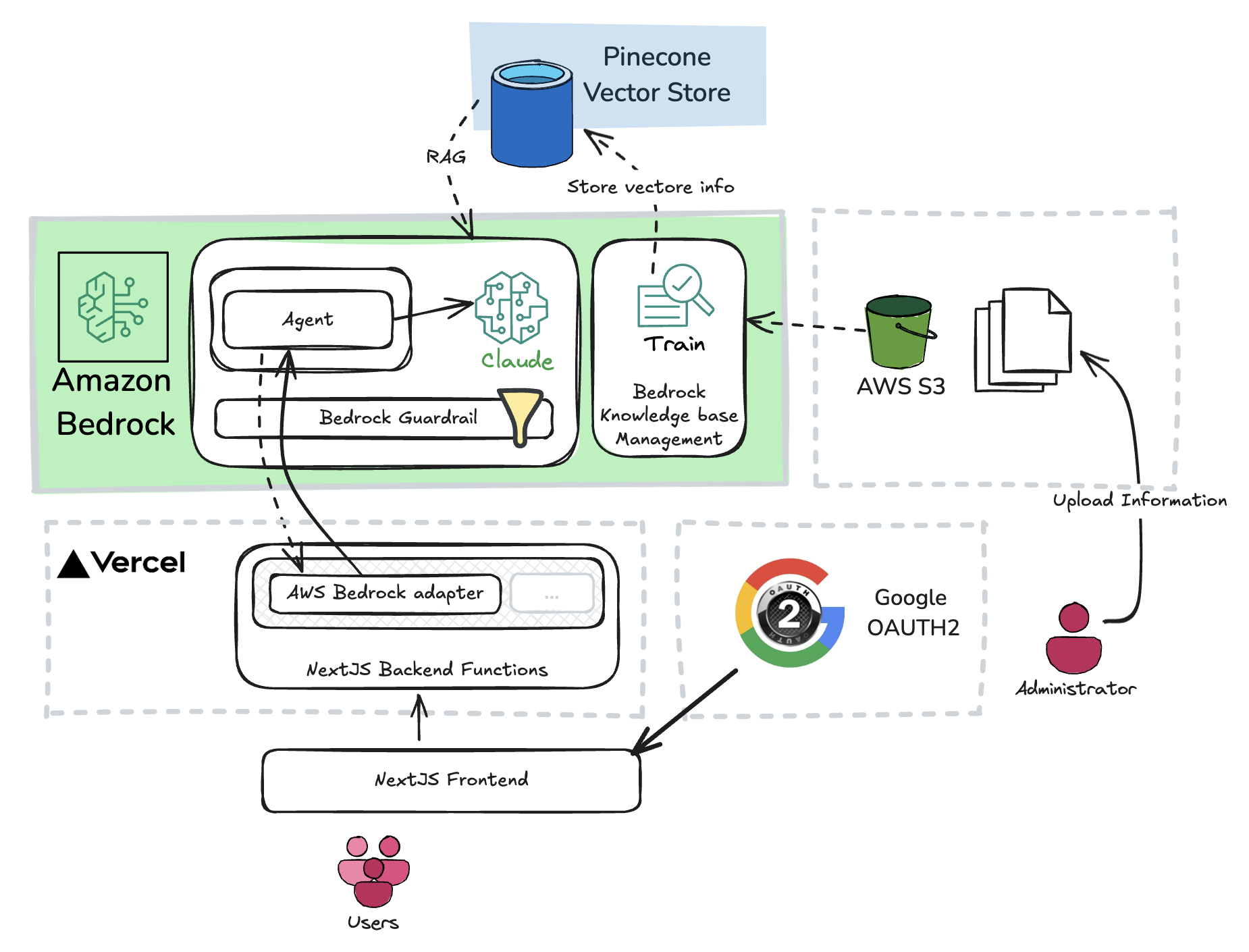

This document builds on the main journey page, which details the evolution of CV Chat, and describes how the system operates when AWS Bedrock “Agents” handle language processing. AWS Bedrock takes a more open and modular approach: you can select from various underlying models and integrate with the broader set of AWS services. Below, you’ll find the key components, a high-level workflow, and the major differences from the OpenAI setup.

Core System Components

- Vercel: Hosts the Next.js frontend and backend, providing serverless functions and smooth deployments.

- Next.js Frontend & Backend: Powers the user interface and handles API access and authorization.

- Google OAuth2: Authenticates users for basic identification, which helps restrict access and manage the number of sessions.

- Vector Store (Pinecone or AWS Native): Unlike the OpenAI implementation with a built-in vector store, here you can choose from multiple options. Pinecone offers a robust free tier, while AWS-native services provide tighter ecosystem integration.

- AWS Bedrock Agents: The AWS counterpart to OpenAI’s “Assistants,” offering a flexible API that can interface with various LLMs such as Claude 3.0.

- Adapter Pattern: Standardizes communication with different AI platforms by handling provider-specific API details under a single interface.

- AWS S3 Bucket: Stores the CV documents, making it easy to integrate with existing AWS-based infrastructure.

- Administrator: Responsible for uploading and managing CV documents in the S3 bucket.

- Users: End users who interact with the Next.js frontend.

High-Level Workflow

Data Preparation

- Administrators store CV documents in an S3 bucket, providing a secure and scalable location.

- CVs are chunked into sections (work history, projects) and turned into vector embeddings.

- Each section is enriched with metadata for more precise retrieval.
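The chunking step can be sketched as follows. This is a minimal sketch, assuming CVs are available as plain text with section headings on their own lines (for example `Work History:`). The `CvChunk` type, the heading list, and the metadata fields are hypothetical, and the embedding call is omitted because it depends on the chosen provider.

```typescript
// Hypothetical chunk shape: the text to embed plus metadata used to filter results at query time.
interface CvChunk {
  id: string;
  text: string;
  metadata: { candidate: string; section: string; sourceKey: string };
}

// Assumed section headings; adjust to the structure of your CV documents.
const SECTION_HEADINGS = ["Work History", "Projects", "Education", "Skills"];

// Split a plain-text CV into one chunk per recognised section.
function chunkCv(text: string, candidate: string, sourceKey: string): CvChunk[] {
  const chunks: CvChunk[] = [];
  let section = "Summary"; // label for any text before the first heading
  let buffer: string[] = [];

  // Emit the buffered lines as a chunk tagged with the current section.
  const flush = () => {
    const body = buffer.join("\n").trim();
    if (body.length > 0) {
      chunks.push({
        id: `${sourceKey}#${chunks.length}`,
        text: body,
        metadata: { candidate, section, sourceKey },
      });
    }
    buffer = [];
  };

  for (const line of text.split("\n")) {
    const normalised = line.trim().replace(/:$/, "").toLowerCase();
    const heading = SECTION_HEADINGS.find((h) => h.toLowerCase() === normalised);
    if (heading) {
      flush(); // close the previous section before starting a new one
      section = heading;
    } else {
      buffer.push(line);
    }
  }
  flush();
  return chunks;
}
```

Each chunk keeps its S3 key (`sourceKey`) and section name, which is the kind of metadata that lets retrieval be narrowed, for example to work history only.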

User Query Handling (Frontend)

- The Next.js frontend performs basic UI validations when a user submits a query.

- The backend verifies the user’s identity via Google OAuth2 and enforces API usage limits to prevent excessive costs.
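The backend checks can be sketched as a single gate that runs before any model call. This is a minimal sketch, assuming the Google OAuth2 session has already been verified and exposes the user’s email. The limits and `checkRequest` are hypothetical, and the counters live in memory for illustration only: Vercel functions are stateless and may run as several instances, so a real deployment would keep counts in a shared store.

```typescript
// Hypothetical limits; tune them to your budget.
const MAX_QUERY_LENGTH = 500;
const WINDOW_MS = 60 * 60 * 1000; // one hour
const MAX_REQUESTS_PER_WINDOW = 20;

type CheckResult = { ok: true } | { ok: false; status: number; reason: string };

// Fixed-window counters keyed by user email (illustration only: not shared across instances).
const usage = new Map<string, { windowStart: number; count: number }>();

function checkRequest(userEmail: string | undefined, query: string, now: number = Date.now()): CheckResult {
  // 1. Identification: only signed-in users may call the model.
  if (!userEmail) {
    return { ok: false, status: 401, reason: "Sign in with Google to use the chat." };
  }
  // 2. Server-side validation, repeating the frontend checks.
  const trimmed = query.trim();
  if (trimmed.length === 0 || trimmed.length > MAX_QUERY_LENGTH) {
    return { ok: false, status: 400, reason: `Query must be 1-${MAX_QUERY_LENGTH} characters.` };
  }
  // 3. Usage limit: start a new window if none exists or the old one expired.
  const entry = usage.get(userEmail);
  if (!entry || now - entry.windowStart >= WINDOW_MS) {
    usage.set(userEmail, { windowStart: now, count: 1 });
    return { ok: true };
  }
  if (entry.count >= MAX_REQUESTS_PER_WINDOW) {
    return { ok: false, status: 429, reason: "Usage limit reached; try again later." };
  }
  entry.count += 1;
  return { ok: true };
}
```

Rejecting requests before the adapter is reached means blocked or malformed queries never incur model costs.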

AI Invocation via the Adapter

- The adapter receives the user’s query and formats it for AWS Bedrock Agents.

- Administrators can choose from different LLMs within Bedrock. Claude 3.0 is currently available, but future releases may offer more advanced models.

- The adapter abstracts away provider details like endpoints and authentication keys.
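The adapter can be sketched as a small interface that the chat route depends on, with one implementation per provider. This is a minimal sketch, not the project’s actual code: `ChatProvider`, `BedrockAgentAdapter`, `AgentRequest`, `AgentEvent`, and `AgentTransport` are hypothetical names. The request fields (`agentId`, `agentAliasId`, `sessionId`, `inputText`) and the streamed `completion` events carrying `chunk.bytes` mirror the Bedrock `InvokeAgent` API. In production the transport would wrap `BedrockAgentRuntimeClient.send(new InvokeAgentCommand(input))` from `@aws-sdk/client-bedrock-agent-runtime`; it is injected here so the sketch runs without AWS credentials.

```typescript
// Provider-agnostic contract used by the chat route.
interface ChatProvider {
  ask(sessionId: string, question: string): Promise<string>;
}

// Subset of the InvokeAgent request and response shapes this sketch relies on.
interface AgentRequest {
  agentId: string;
  agentAliasId: string;
  sessionId: string;
  inputText: string;
}
interface AgentEvent {
  chunk?: { bytes?: Uint8Array };
}
type AgentTransport = (req: AgentRequest) => Promise<{ completion?: AsyncIterable<AgentEvent> }>;

class BedrockAgentAdapter implements ChatProvider {
  private readonly agentId: string;
  private readonly agentAliasId: string;
  private readonly send: AgentTransport;

  constructor(agentId: string, agentAliasId: string, send: AgentTransport) {
    this.agentId = agentId;
    this.agentAliasId = agentAliasId;
    this.send = send; // wraps the AWS SDK client in production
  }

  async ask(sessionId: string, question: string): Promise<string> {
    const response = await this.send({
      agentId: this.agentId,
      agentAliasId: this.agentAliasId,
      sessionId, // reusing a session ID lets the agent keep conversation context
      inputText: question,
    });
    if (!response.completion) {
      return "";
    }
    // The agent returns its answer as a stream of byte chunks; decode and join them.
    const decoder = new TextDecoder();
    let answer = "";
    for await (const event of response.completion) {
      if (event.chunk?.bytes) {
        answer += decoder.decode(event.chunk.bytes, { stream: true });
      }
    }
    return answer + decoder.decode(); // flush any buffered partial character
  }
}
```

Because the route only sees `ChatProvider`, switching to the OpenAI implementation means adding another class with the same `ask` method rather than changing the route itself.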

Retrieval Augmented Generation (RAG)

- The system embeds the user’s query and searches Pinecone or an AWS-native vector store for the most relevant CV chunks.

- The retrieved chunks are added to the model’s context before it generates a response, so the answer includes CV-specific details.
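Pinecone and AWS-native vector stores perform the similarity search on the server side, but the retrieval and augmentation steps can be sketched in memory. This is a minimal sketch, assuming the query and the chunks were embedded with the same model. `StoredChunk`, `topK`, and `buildPrompt` are hypothetical names, and cosine similarity stands in for whatever metric the chosen vector store is configured with.

```typescript
interface StoredChunk {
  text: string;
  section: string;
  embedding: number[];
}

// Cosine similarity between two equal-length vectors (0 if either is all zeros).
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks most similar to the query embedding, best match first.
function topK(query: number[], chunks: StoredChunk[], k: number): StoredChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosine(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.chunk);
}

// Place the retrieved CV excerpts ahead of the question so the model answers from them.
function buildPrompt(question: string, context: StoredChunk[]): string {
  const excerpts = context.map((c, i) => `[${i + 1}] (${c.section}) ${c.text}`).join("\n");
  return `Answer using only these CV excerpts:\n${excerpts}\n\nQuestion: ${question}`;
}
```

Numbering the excerpts and keeping their section labels gives the model, and later the formatting step, a simple way to refer back to the source material.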

Answer Assembly with AWS Bedrock Guardrails, Moderation, and Formatting

- AWS Bedrock Guardrails and moderation are available out of the box, making it more straightforward to maintain quality and policy compliance.

- The final response is formatted for clarity and displayed in the Next.js frontend chat interface.

Design Decisions

More Open Platform

Bedrock allows you to pick from a variety of LLMs, offering greater flexibility than single-model solutions.

S3 for Document Storage

Storing CVs in S3 simplifies integration with other AWS services and existing infrastructures.

Guardrails and Moderation

Built-in safeguards in Bedrock can be enabled to automatically moderate outputs without additional API calls.

Claude 3.0 Support

At the time of writing, Claude 3.0 is available for agent configuration; more advanced versions may become available in future releases.

Where to Go Next

Refer to the main journey page for a broader explanation of the system's architecture and how it evolved. To compare this approach with another platform, see our [OpenAI-based solution](/blog/cv-chat-architecture-openai). Whether you choose Pinecone or an AWS-native vector service, the ability to select your own model makes AWS Bedrock well suited to enterprise environments. If you plan to implement streamed responses, note that each AWS Bedrock model may handle streaming differently, and some configurations can make fully interactive, real-time output difficult.
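If you do stream, one option in a Next.js route handler is to forward the agent’s byte chunks to the browser as they arrive instead of buffering the whole answer. This is a minimal sketch, assuming the `completion` event shape returned by `InvokeAgent` (events carrying `chunk.bytes`); `toReadableStream` is a hypothetical helper, and whether the agent emits text incrementally or as a single final chunk depends on the model and agent configuration.

```typescript
interface AgentEvent {
  chunk?: { bytes?: Uint8Array };
}

// Convert the agent's completion events into a web ReadableStream of raw bytes.
function toReadableStream(completion: AsyncIterable<AgentEvent>): ReadableStream<Uint8Array> {
  const iterator = completion[Symbol.asyncIterator]();
  return new ReadableStream<Uint8Array>({
    // Pull one text chunk per request so back-pressure from the client is respected.
    async pull(controller) {
      while (true) {
        const result = await iterator.next();
        if (result.done) {
          controller.close();
          return;
        }
        const bytes = result.value.chunk?.bytes;
        if (bytes) {
          controller.enqueue(bytes);
          return;
        }
        // Events without text (for example trace events) are skipped.
      }
    },
    // Stop reading from the agent if the client disconnects.
    async cancel() {
      await iterator.return?.();
    },
  });
}
```

In an App Router route handler, the result can be returned directly, for example `return new Response(toReadableStream(completion), { headers: { "Content-Type": "text/plain; charset=utf-8" } });`, and the frontend reads it incrementally with `response.body.getReader()`.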